Eye-Tracking-Based Classification Using Machine Learning

Eye-Tracking-Based Classification of Information Search Behavior Using Machine Learning: Evidence from Experiments in Physical Shops and Virtual Reality Shopping Environments

Classifying information search behavior helps tailor recommender systems to individual customers’ shopping motives. But how can we identify these motives without requiring users to exert too much effort? Our research goal is to demonstrate that eye tracking can be used at the point of sale to do so. We focus on two frequently investigated shopping motives: goal-directed and exploratory search. To train and test a prediction model, we conducted two eye-tracking experiments in front of supermarket shelves.

The first experiment was carried out in immersive virtual reality; the second, in physical reality, that is, as a field study in a real supermarket. We conducted a virtual reality study because recently launched virtual shopping environments suggest that there is great interest in using this technology as a retail channel. Our empirical results show that support vector machines allow the correct classification of search motives with 80% accuracy in virtual reality and 85% accuracy in physical reality. Our findings also imply that eye movements allow shopping motives to be identified relatively early in the search process: our models achieve 70% prediction accuracy after only 15 seconds in virtual reality and 75% in physical reality. Applying an ensemble method increases the prediction accuracy substantially, to about 90%. Consequently, the approach that we propose could be used for the satisfactory classification of consumers in practice. Furthermore, both environments’ best predictor variables overlap substantially. This finding provides evidence that information search behavior in virtual reality might be similar to that in physical reality. Finally, we also discuss managerial implications for retailers and companies that are planning to use our technology to personalize a consumer assistance system.
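To make the early-prediction idea concrete, the following is a minimal sketch of how gaze data from only the first 15 seconds might be summarized before classification, and how several classifiers' outputs might be combined. It is not the authors' pipeline: the `Fixation` record, the four feature names, and the use of a simple majority vote as the ensemble rule are all assumptions made for illustration. The abstract names neither the predictor variables nor the specific ensemble method.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Fixation:
    start_s: float     # onset time in seconds since the shopper reached the shelf
    duration_s: float  # fixation duration in seconds
    product_id: str    # hypothetical identifier of the fixated shelf item


def early_window_features(fixations, window_s=15.0):
    """Summarize only the fixations that begin within the first `window_s` seconds."""
    early = [f for f in fixations if f.start_s < window_s]
    if not early:
        return {"n_fixations": 0, "mean_duration_s": 0.0,
                "n_distinct_products": 0, "revisit_share": 0.0}
    distinct = {f.product_id for f in early}
    return {
        "n_fixations": len(early),
        "mean_duration_s": sum(f.duration_s for f in early) / len(early),
        "n_distinct_products": len(distinct),
        # Share of fixations landing on a product already fixated in this window.
        "revisit_share": (len(early) - len(distinct)) / len(early),
    }


def majority_vote(labels):
    """Combine class labels from several classifiers (assumed ensemble rule).

    Ties are resolved in favor of the label that appears first in `labels`.
    """
    return Counter(labels).most_common(1)[0][0]
```

A feature dictionary like this could be passed to any classifier, for example a support vector machine as used in the study, and `majority_vote` could then merge the labels predicted by several such models for the same shopper.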

https://pubsonline.informs.org/doi/10.1287/isre.2019.0907

Identify Customer Preferences in Virtual Reality using Eye-Tracking Data for Machine-Learning Based Time Series Classification

Owing to intensified efforts to realize the metaverse, Virtual and Augmented Reality hardware is advancing rapidly. An increasing number of head-mounted displays are equipped with eye trackers. These goggles can capture gaze information on the fly. Such gaze information in Virtual Reality (VR) applications might soon serve as features for recommender systems that learn user preferences on the fly when shopping in VR. Drawing on the eye-mind hypothesis, we envision a recommender system that predicts customer health-consciousness. We propose to treat gaze information as time series and to use a deep time series classifier for inference.
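As an illustration of what treating gaze information as time series can involve, the sketch below cuts a stream of gaze samples into fixed-length windows and standardizes each channel, which is a common preparation step before feeding sequences to a time series classifier. The sample layout (one tuple of channel values per time step), the window length, and the step size are assumptions for illustration; the abstract does not specify the preprocessing or the network architecture.

```python
from statistics import fmean, pstdev


def sliding_windows(series, length, step):
    """Cut a multichannel series (a list of per-sample tuples) into fixed-length windows.

    Trailing samples that do not fill a complete window are dropped.
    """
    if length <= 0 or step <= 0:
        raise ValueError("length and step must be positive")
    return [series[i:i + length]
            for i in range(0, len(series) - length + 1, step)]


def zscore_channels(window):
    """Standardize each channel of one window to zero mean and unit variance.

    Constant channels (zero standard deviation) are mapped to 0.0.
    """
    normed = []
    for channel in zip(*window):  # iterate over channels rather than samples
        mu, sigma = fmean(channel), pstdev(channel)
        normed.append([(v - mu) / sigma if sigma > 0 else 0.0 for v in channel])
    return [tuple(row) for row in zip(*normed)]  # back to one tuple per sample
```

For example, a recording with hypothetical channels such as horizontal and vertical gaze position could be split with `sliding_windows(samples, length=120, step=60)`, and each window passed through `zscore_channels` before classification.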

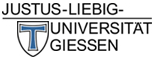

Figure 1. Eye tracking data as time series.

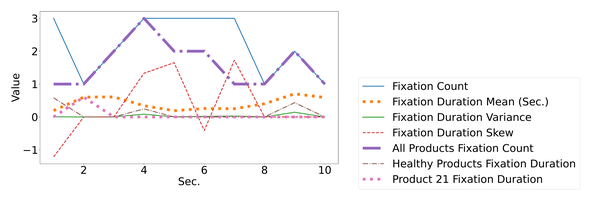

Figure 2. Deep time series classifier for inference.

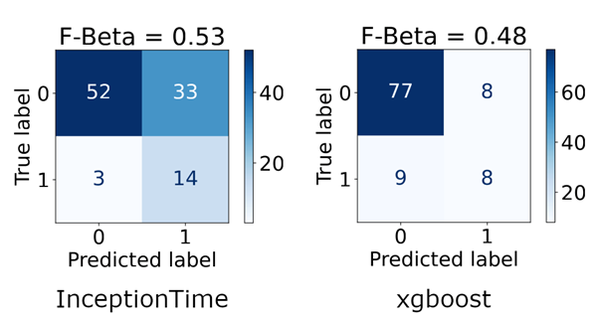

Our results are based on data from a large-scale laboratory experiment. Classification results indicate superior performance compared to a shallow gradient boosting baseline. This study enables researchers and practitioners to judge whether using time series classification for gaze data is reasonable to generate features for recommender systems in virtual and augmented purchase scenarios.

Figure 3. Confusion matrices.